ETL

In this project, we have built an ETL pipeline in Python. It extracts weather data from an API using the requests library, transforms the data using pandas and NumPy, and finally loads it into a DynamoDB table with the awswrangler library.

Before Starting

For this project, we use the meteoblue.com API. meteoblue gives us an API key for making API calls, and we store that key in a config.py file.
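A minimal sketch of what `config.py` could contain. The variable name `API_KEY` is hypothetical, and the optional override through a `METEOBLUE_API_KEY` environment variable (also a hypothetical name) is an assumption added so the real key does not have to be committed:

```python
import os

# config.py: keeps the meteoblue API key out of the pipeline code.
# API_KEY and METEOBLUE_API_KEY are hypothetical names chosen for this sketch.
API_KEY = os.environ.get("METEOBLUE_API_KEY", "your-meteoblue-api-key")
```

Other modules can then read the key with `from config import API_KEY`.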

Extract

Meteoblue.com offers many weather-related options; we chose the 7-day weather option and the air quality option.

In the code below, we request the data for both options from the API.
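A hedged sketch of the extraction step with requests. The base URL `https://my.meteoblue.com/packages/`, the query parameters (`apikey`, `lat`, `lon`, `format`) and the package name used below are assumptions about the meteoblue API, not taken from this project; check them against your account's documentation. The optional `session` argument exists only so the function can be exercised without network access.

```python
# Assumed base URL for meteoblue packages; verify it against your account docs.
BASE_URL = "https://my.meteoblue.com/packages/"


def fetch_package(package, api_key, lat, lon, session=None, timeout=10):
    """Request one meteoblue package and return the decoded JSON payload."""
    if session is None:
        import requests  # imported lazily so a stub session can stand in for it
        session = requests
    params = {"apikey": api_key, "lat": lat, "lon": lon, "format": "json"}
    response = session.get(BASE_URL + package, params=params, timeout=timeout)
    response.raise_for_status()  # fail loudly on 4xx/5xx instead of parsing an error body
    return response.json()
```

It would be called once per option, for example `fetch_package("basic-day", API_KEY, 47.56, 7.57)` for the daily forecast and again with the air quality package name; `"basic-day"` and the coordinates are illustrative only.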

Transform

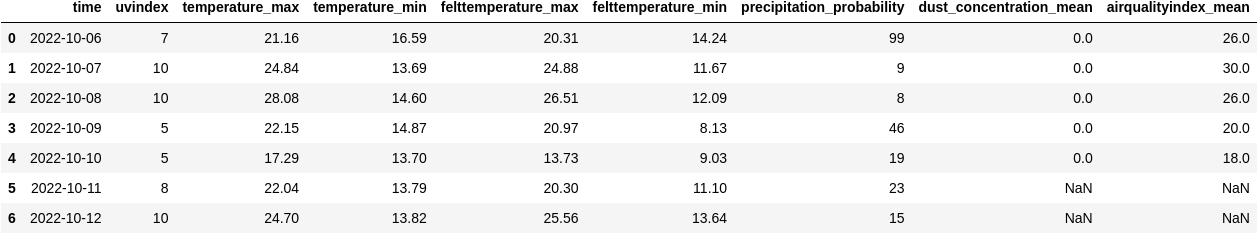

To transform the data, we use pandas together with NumPy. Before converting the JSON into a DataFrame, we select the values we are going to work with.
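One way to select the fields before building the DataFrame is sketched below. The section name `data_day` and the field names are hypothetical placeholders; replace them with the keys that actually appear in your API response.

```python
import pandas as pd

# Hypothetical keys: replace them with the ones present in your API response.
SECTION = "data_day"
FIELDS = ["time", "temperature_mean", "felttemperature_mean", "precipitation", "uvindex"]


def json_to_dataframe(payload, section=SECTION, fields=FIELDS):
    """Keep only the selected fields of one JSON section and build a DataFrame."""
    data = payload[section]
    missing = [f for f in fields if f not in data]
    if missing:
        raise KeyError(f"fields not in response: {missing}")
    # Each field is a list with one entry per day, so it maps directly to a column.
    return pd.DataFrame({field: data[field] for field in fields})
```

Raising on missing keys surfaces a changed API response early instead of producing a DataFrame with silently absent columns.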

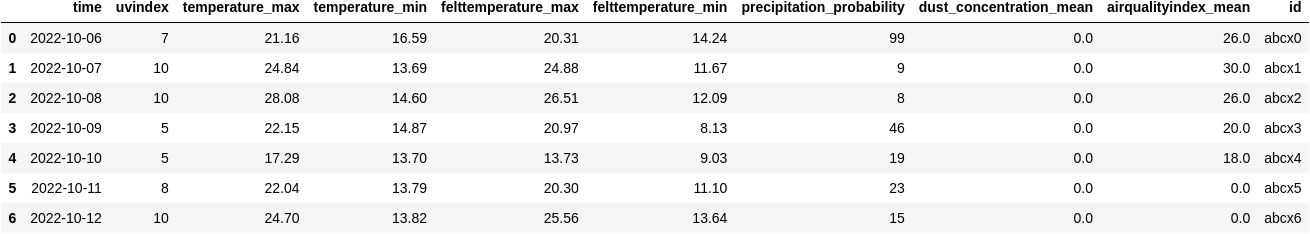

Because this data will be loaded into a DynamoDB table, we replace all NaN values and add an id column, whose values have to be hashes.
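The project does not show how its hash is built, so the sketch below assumes one possible approach: fill NaN with a fixed value (NaN is not a valid DynamoDB number) and derive each `id` from a SHA-256 digest of the row's values. The fill value `0` is also an assumption.

```python
import hashlib

import pandas as pd


def prepare_for_dynamodb(df: pd.DataFrame, fill_value=0) -> pd.DataFrame:
    """Return a copy with NaN replaced and a hash-based 'id' column added."""
    out = df.fillna(fill_value)  # fillna returns a new frame; df is left untouched
    # Join each row's values as text, then hash that text into a hex string id.
    row_text = out.astype(str).apply("|".join, axis=1)
    out["id"] = row_text.map(lambda s: hashlib.sha256(s.encode("utf-8")).hexdigest())
    return out
```

Hashing the row contents makes the id deterministic, so loading the same row twice overwrites one item rather than creating a duplicate; a random UUID would be the alternative if duplicates are acceptable.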

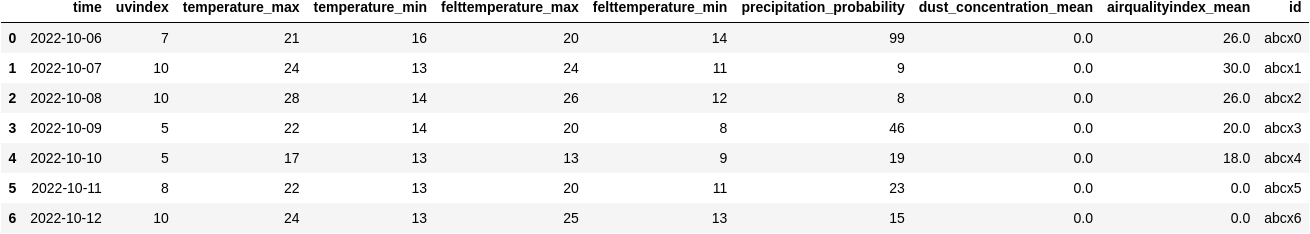

We convert the temperature and felt-temperature values to integers so the data reads more clearly, and we convert the precipitation and dust concentration values to the object type.
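A sketch of these conversions with pandas `astype`. The column names are hypothetical, and rounding before the integer cast is an assumption: `astype(int)` on its own truncates toward zero. The cast also requires that NaN values were already replaced.

```python
import pandas as pd

# Hypothetical column names; rename them to match your DataFrame.
INT_COLUMNS = ["temperature", "felttemperature"]
OBJECT_COLUMNS = ["precipitation", "dust"]


def convert_types(df: pd.DataFrame) -> pd.DataFrame:
    """Return a copy with integer temperatures and object-typed measurements."""
    out = df.copy()
    for col in INT_COLUMNS:
        # Round first: astype(int) alone would turn 21.9 into 21.
        out[col] = out[col].round().astype(int)
    for col in OBJECT_COLUMNS:
        out[col] = out[col].astype(object)
    return out
```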

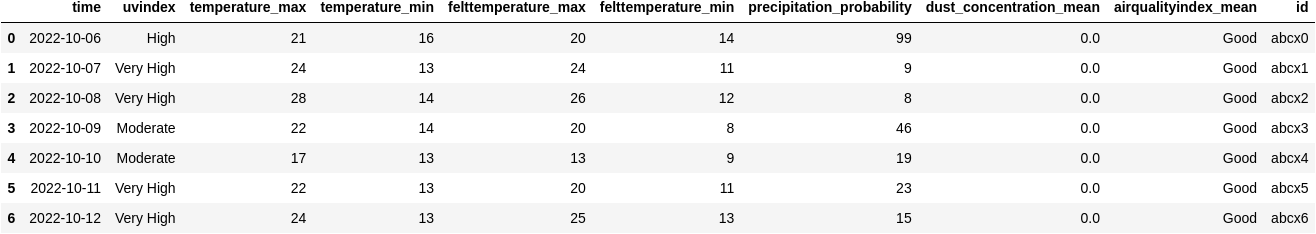

For the UV index and air quality columns, we classify each value into a category according to its level.
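The project's exact categories are not shown, so this sketch assumes the WHO UV index bands (Low up to 2, Moderate 3 to 5, High 6 to 7, Very High 8 to 10, Extreme 11 and above) and uses NumPy's `np.select`. Air quality would follow the same pattern with its own thresholds, which are not given here.

```python
import numpy as np
import pandas as pd


def classify_uv(uv: pd.Series) -> pd.Series:
    """Map numeric UV index values to WHO-style category labels (assumed bands)."""
    conditions = [uv <= 2, uv <= 5, uv <= 7, uv <= 10]
    labels = ["Low", "Moderate", "High", "Very High"]
    # np.select takes the first condition that is True, so the bands apply in order.
    # NaN fails every comparison and would land in the default, hence fill NaN first.
    return pd.Series(np.select(conditions, labels, default="Extreme"), index=uv.index)
```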

Load

AWS Data Wrangler, now called AWS SDK for pandas (awswrangler), is what we use to load our DataFrame into a DynamoDB table.
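A minimal sketch of the load step with `awswrangler.dynamodb.put_df`. It assumes the DynamoDB table already exists with `id` as its key, that AWS credentials are configured, and that the table name (for example `"weather"`) is a hypothetical placeholder. The `put_df` parameter is only there so the function can be tested without AWS.

```python
def load_to_dynamodb(df, table_name, put_df=None):
    """Write every row of df as an item in an existing DynamoDB table."""
    if put_df is None:
        import awswrangler as wr  # AWS SDK for pandas
        put_df = wr.dynamodb.put_df
    # Assumption: the table's key attribute is named "id".
    if "id" not in df.columns:
        raise ValueError("DataFrame must contain the table's key column 'id'")
    put_df(df=df, table_name=table_name)
    return len(df)
```

Checking for the key column before calling AWS gives a clearer error than a rejected write from DynamoDB.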

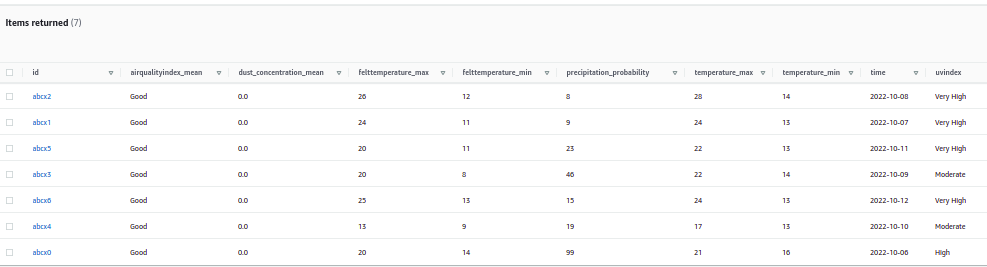

After the process finishes, the result is stored in the DynamoDB table.

This is one way to build an ETL pipeline, but there are other powerful approaches. For example, the extraction step can pull from several sources, the transformation step can combine them into more useful information, and the final step can save the result to another database or turn it into charts.
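Combining sources in the transformation step can be as simple as joining them on a shared date column. The sketch below assumes two per-day DataFrames, such as the 7-day weather and air quality data, that share a hypothetical `time` column with one row per day.

```python
import pandas as pd


def combine_sources(weather: pd.DataFrame, air_quality: pd.DataFrame, on: str = "time") -> pd.DataFrame:
    """Inner-join two per-day DataFrames on their shared date column."""
    # validate="one_to_one" raises if either side has duplicate dates,
    # which would otherwise multiply rows silently.
    return weather.merge(air_quality, on=on, how="inner", validate="one_to_one")
```

An inner join keeps only the days present in both sources; `how="left"` would keep every weather day and leave missing air quality values as NaN.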